Superfast AI 3/20/23

GPT-4, Leaked Meta Model, AI Applications in Finance and Cars, a Piano-Playing Co-Pilot... we have a lot to cover!

Hi everyone, today we’re covering GPT-4, a leaked Meta model, AI applications in finance and cars, a piano-playing co-pilot and more… we have a lot to cover!

Let’s dive in!

🗞️ News

GPT-4

Here’s the TLDR:

GPT-4 is multi-modal, meaning it can work with more than one kind of input or output.

In this case, it accepts both text and images, and outputs text.

Text includes code.

Other kinds of inputs/outputs we could see in the future are image outputs, video, and outputs paired with robotics to produce physical results. (see: SayCan and watch a robot transform text → goal generation → cleaning up a spill)

GPT-4 can pass a lot of skills assessments.

Notably, where GPT-3.5 scored in the bottom 10% of test takers on a simulated bar exam, GPT-4 scores in the top 10%.

It produces state-of-the-art results on several major benchmark datasets, including MMLU, HellaSwag and AI2 Reasoning Challenge.

GPT-4 can take a rough image sketch and turn it into a website.

Start at 16:12 in this quick demo:

GPT-4 reverses a notable inverse scaling trend.

Last year, there was an Inverse Scaling Prize competition to surface instances where foundation models got worse on certain metrics as they got larger or more advanced.

GPT-4 reversed the negative effect of scaling on hindsight neglect, a task that tests whether a model judges a bet by its expected value rather than by how it turned out. Larger models had been more likely to pick the answer that matched the outcome of the bet, even when it contradicted the expected value.
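To make hindsight neglect concrete, here is a minimal Python sketch. It is my own illustration, not the Inverse Scaling Prize task code, and the function names and dollar amounts are made up. It judges a bet purely by its expected value, so a lucky win on a bad bet still counts as a bad decision.

```python
def expected_value(p_win: float, win_amount: float, lose_amount: float) -> float:
    """Probability-weighted payoff of a bet that either wins or loses."""
    return p_win * win_amount - (1 - p_win) * lose_amount


def was_good_decision(p_win: float, win_amount: float, lose_amount: float) -> bool:
    """Judge the bet by its expected value, ignoring how it turned out."""
    return expected_value(p_win, win_amount, lose_amount) > 0


# Hypothetical bet: 9% chance to win $5, 91% chance to lose $900.
# Even if the player happens to win, the expected value is negative,
# so taking the bet was still the wrong call.
lucky_but_bad = was_good_decision(p_win=0.09, win_amount=5, lose_amount=900)

# Hypothetical bet: 90% chance to win $100, 10% chance to lose $10.
# Even if the player happens to lose, taking it was the right call.
unlucky_but_good = was_good_decision(p_win=0.90, win_amount=100, lose_amount=10)

print(lucky_but_bad, unlucky_but_good)
```

A model that falls for hindsight neglect would flip both answers, calling the first bet a good decision because it paid off and the second a bad one because it didn’t.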

Check out the full page here.

Google’s PaLM API

Google announced API access to PaLM, one of the industry’s leading large language models. PaLM has been used in a number of other research projects, including SayCan and Med-PaLM (which recently set a new SOTA for medical models).

API access gives researchers and builders alike more room to conduct research, build applications, and fine-tune on top of the foundation model. Check out the Google page here.

Applications in AI

Duolingo Max leverages GPT-4 to offer language tutors (link)

Morgan Stanley rolls out ChatGPT to assist its financial advisors (link)

GM brings AI assistance to cars (link)

With these new applications rolling out, a few questions come up for me:

Will big institutions go straight to foundation model companies to build their AI products?

Who wins the application layer? Startups that can move quickly and hire great talent, or legacy companies that have built-in distribution?

What do you think? Reply to this email and drop me a line.

Piano Genius

Want to play piano but too busy to learn? Enter: AI-assisted piano performance (link).

The Google Magenta team created a co-pilot for your piano aspirations. The video is worth a thousand words, so I recommend checking out the demo above. You can also read the summary page here and try out the web demo here!

Another cool musical application from the team at Google Magenta: SingSong. Ever felt like you were destined for American Idol glory but never had the band to back you up? Try SingSong, your own production band that makes your vocals the star of the show. My take: it’s not perfect, but it’s an interesting start down the path of these kinds of applications. Check it out below!

Misc Links

The misaligned behavior of Bing ChatGPT (link)

Open-source vs closed-source LLMs from Stability AI’s CEO, Emad Mostaque:

Can LLMs do math? (link)

Other headlines

Adept raised a $350M Series B at a $1B post-money valuation (link)

Midjourney v5 dropped last week. Here are some awesome examples (link)

Stability AI is looking to raise more money at a $4B valuation (link)

Crazy stat

Bing hit 100M daily active users last week (link)

Roughly a third of Bing's millions of active users are people who weren't using the service before the introduction of AI chat.

(source)

📚 Concepts & Learning

Training and Deployment Costs

Stanford Alpaca (link)

Stanford researchers fine-tuned the Meta LLaMA model to create a model that behaves much like OpenAI’s text-davinci-003 on a budget of under $600: roughly $500 to build a synthetic instruction dataset with OpenAI’s API and $100 for compute costs.

That’s a head-turner for sure!

As a side note, this is the same Meta LLaMA model that got leaked on 4chan, meaning all the weights were, for a short period of time, publicly available and downloadable online 🤯 It’s interesting to see a concrete example of how quickly research can progress when a proprietary model’s weights become openly available, and how that might further AI progress. On the other hand, the downside remains that bad actors could misuse powerful AI… like posting it on a public forum without Meta’s permission.

Definitely cause for some side-eye here. 👀

Bias in AI

Want to understand how OpenAI addresses bias in their models? They use a mixture of feedback from human-labeled datasets and engineering techniques that automate that regulation. Check out their quick explainer on how they conduct reinforcement learning from human feedback (link).

They have a short guideline explainer on the topics they regulate, including:

when a user requests inappropriate content (e.g. hate speech, harassment, violence, etc.)

when a user requests information about controversial topics (e.g. who should I vote for in the upcoming election?)

ChatGPT is asked not to take a personal stance, but can describe known viewpoints

when a user requests fake information (e.g. Why did Napoleon want to invade Puerto Rico?)

As OpenAI acknowledges, ChatGPT sometimes refuses requests it should fulfill and sometimes fulfills requests it should refuse. Both fronts have room to improve in future development.

LLMs also have a tendency to hallucinate or fabricate knowledge about the world. OpenAI acknowledges that feedback from users and human labelers can improve the model on these dimensions.

AI understands theory of mind

In this paper, Stanford researchers explore the idea that LLMs have developed theory of mind. Theory of mind is the ability to attribute mental states, such as beliefs and intentions, to other people. In other words, it’s the ability to see something from another person’s perspective.

In their benchmark test:

GPT-3 successfully answered 40% of tasks

GPT-3.5 (davinci-003) answered 90% correctly

GPT-4 answered 95% correctly!

GPT-4 is considered to perform at the level of a seven-year-old child, which is an incredible finding. Dive into the full paper here.

But, haven’t we seen claims of AI sentience before?

In this Stratechery post, we’re reminded of the time Blake Lemoine, a former Google engineer, claimed that Google’s language model had developed sentience. Lemoine was let go from Google soon after the incident.

Theory of mind is one component of sentient behavior, but it’s unlikely to be the whole picture. So, what does it mean to be sentient? What signals in other humans or agents would indicate that to us? Are these signals trivially replicable on the surface through mimicry? How do we know that external actions are actually proxies for internal capabilities? Food for thought!

🎁 Miscellaneous

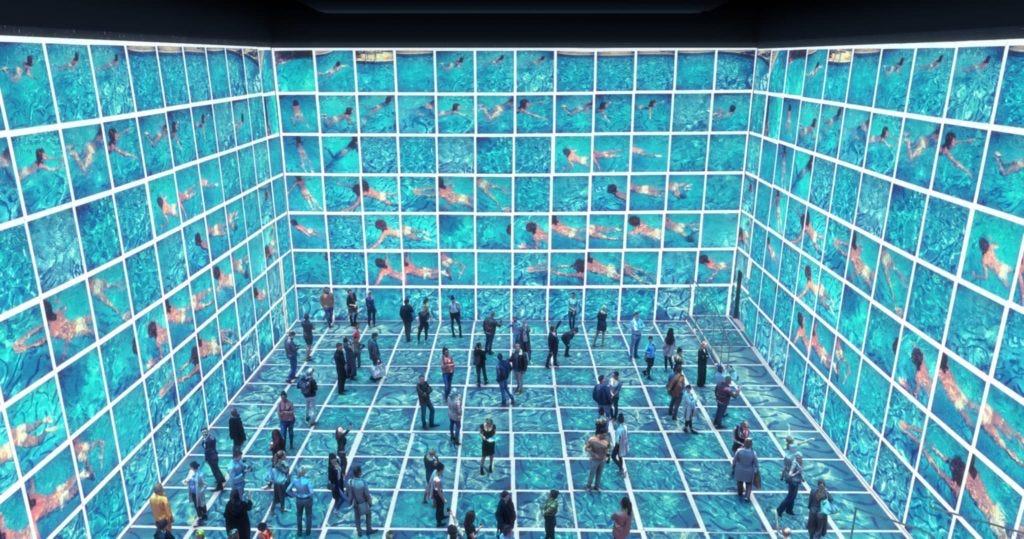

Last weekend, I went to an immersive exhibition of David Hockney’s iconic work. More recently, Hockney has started exploring the art of painting on his iPad. You can see a sample here at the 1:47 mark or here.

This made me think of NVIDIA Canvas, NVIDIA’s draw-to-image generative AI tool. I wonder what Hockney would think of it, and whether his recent embrace of technology in his artistic journey will ever extend to AI.

See a demo of NVIDIA Canvas here:

AI Film Festival

Remember when Runway ML hosted an AI-powered film festival in NYC? (link)

Check out one of the winners here:

Here’s a BTS of how the artist created this piece on Runway ML:

What did you think about this week’s newsletter? Send me a DM on Twitter @barralexandra

That’s it! Have a great day and see you next week! 👋